Court Documents Allege Social Media Giants Knew Platforms Addicted Teens, Targeted Them Anyway

This piece is freely available to read. Become a paid subscriber today and help keep Mencari News financially afloat so that we can continue to pay our writers for their insight and expertise.

Internal documents from Meta, TikTok, YouTube, and Snapchat allegedly reveal the companies knowingly designed addictive features targeting teenagers while blocking safety measures that could reduce engagement, according to media reports on a California court case cited by Australia’s Communications Minister.

Australian Communications Minister Annika Wells highlighted allegations from a legal claim filed in the Northern District of California during a December 3 National Press Club address, using the case to justify Australia’s forthcoming ban on social media for children under 16.

The court filings, as reported in media coverage, include internal company documents that allegedly show social media platforms deliberately pursued young users despite internal research suggesting their products could harm children and adolescents.

The Alleged Internal Evidence

Media reports on the California case describe internal documents from each of the four major platforms revealing corporate knowledge of addiction mechanics and youth targeting strategies.

According to Wells’ address, reports allege Meta “aggressively pursued young users even as its internal research suggested its social media products could be dangerous to kids.” The minister stated that media coverage of the case claims Meta employees proposed multiple safety features but were “blocked by executives who feared new safety features would hamper teens engagement or user growth.”

For Snapchat, Wells cited reports alleging executives admitted that users with “Snapchat addiction have no room for anything else—Snap dominates their life.”

Media reports on YouTube’s internal documents allegedly show employees acknowledged that “driving more frequent daily usage was not well aligned with efforts to improve digital wellbeing,” according to Wells.

An internal TikTok document reportedly recognized that “minors do not have the executive mental function to control their screen time,” Wells told the National Press Club audience.

The “Behavioral Cocaine” Framing

Wells characterized social media algorithms as “behavioral cocaine,” a description she attributed to an unnamed creator of addictive social media features. She argued that platforms designed features specifically to maximize the time users spend scrolling, with a particular focus on teenagers, who lack fully developed impulse control.

The minister claimed social media companies have “made billions of dollars from Australian families because we spend two seconds longer than usual on a video while we’re doomscrolling.”

Platform Revenue Model Under Scrutiny

The California allegations center on the business model linking youth engagement to advertising revenue. According to media reports cited by Wells, the court documents suggest platforms “deliberately target teenagers to maximise engagement and drive advertising revenue” despite internal warnings about potential harms.

Wells argued this revenue incentive created a structural conflict between platform profitability and youth safety, with executives allegedly prioritizing engagement metrics over protective features that could reduce time spent on apps.

Global Context and Regulatory Response

The California case represents one front in growing global scrutiny of social media companies’ youth safety practices. Wells noted that “around the world, we are seeing governments, whistleblowers and ordinary citizens fight back” against platform practices affecting children.

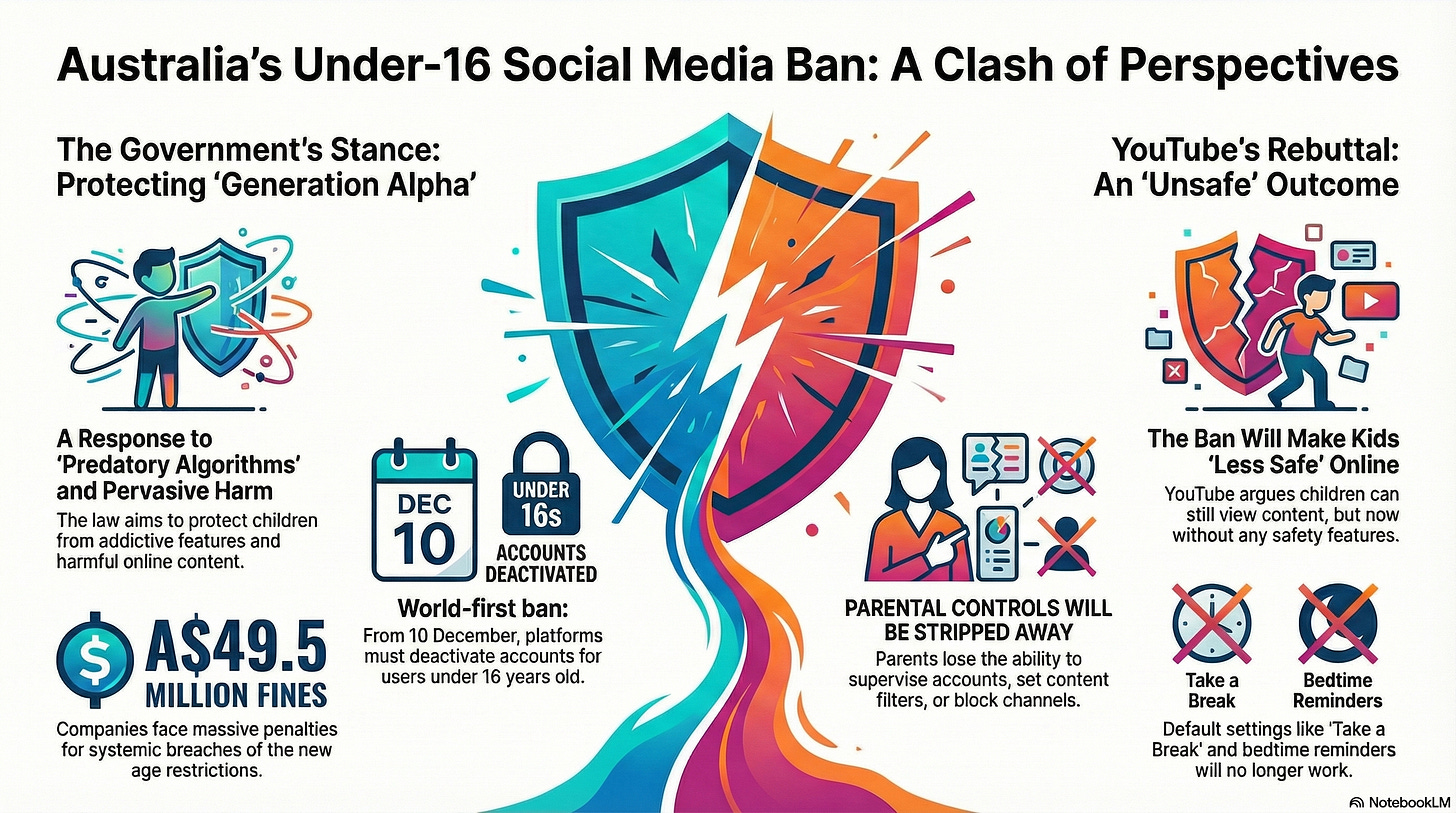

The minister used the alleged internal documents to frame Australia’s December 10 social media ban for under-16s as a response to corporate practices revealed through litigation and whistleblower disclosures.

What the Court Case Reveals

Media reports on the California filing suggest the documents show a pattern of platforms possessing research about youth vulnerability while simultaneously designing features to exploit those vulnerabilities for commercial gain.

The case allegedly demonstrates that social media companies understood the addictive nature of their products—particularly for teenagers whose brains are still developing executive function and impulse control—but chose growth and engagement over implementing safety measures that could protect young users.

Missing Context and Limitations

Wells did not provide direct access to the internal documents or court filings, relying instead on media reports about the California case. The minister did not include responses from Meta, TikTok, YouTube, or Snapchat regarding the allegations, nor did she specify the current status of the legal proceedings.

The platforms named in the case have previously defended their youth safety features and policies in public statements, though Wells did not reference these responses during her address.

Australian Policy Connection

Wells explicitly connected the California allegations to Australia’s regulatory approach, stating that “from 10 December, we start to take that power back for young Australians”—referring to the power platforms currently wield through allegedly addictive design features.

The minister framed the under-16 social media ban as a direct response to the corporate behavior revealed in cases like the California filing, arguing that regulatory intervention became necessary because platforms proved unwilling to prioritize youth safety over engagement and revenue.

What Happens Next

The California case continues through U.S. courts, and the discovery process could produce additional internal documents. Similar litigation in other jurisdictions may reveal further evidence about platform knowledge of youth harms and corporate decision-making around safety features.

Implementation of Australia’s ban on December 10 will provide the first real-world test of whether government regulation can succeed where corporate self-regulation allegedly failed, according to Wells’ framing.
