Australia Releases Guidelines for World's First Under-16 Social Media Ban


Australia published comprehensive regulatory guidelines Tuesday for the world's first ban on social media use by children under 16, giving major tech platforms less than three months to implement systems that remove underage users before the law takes effect on December 10.

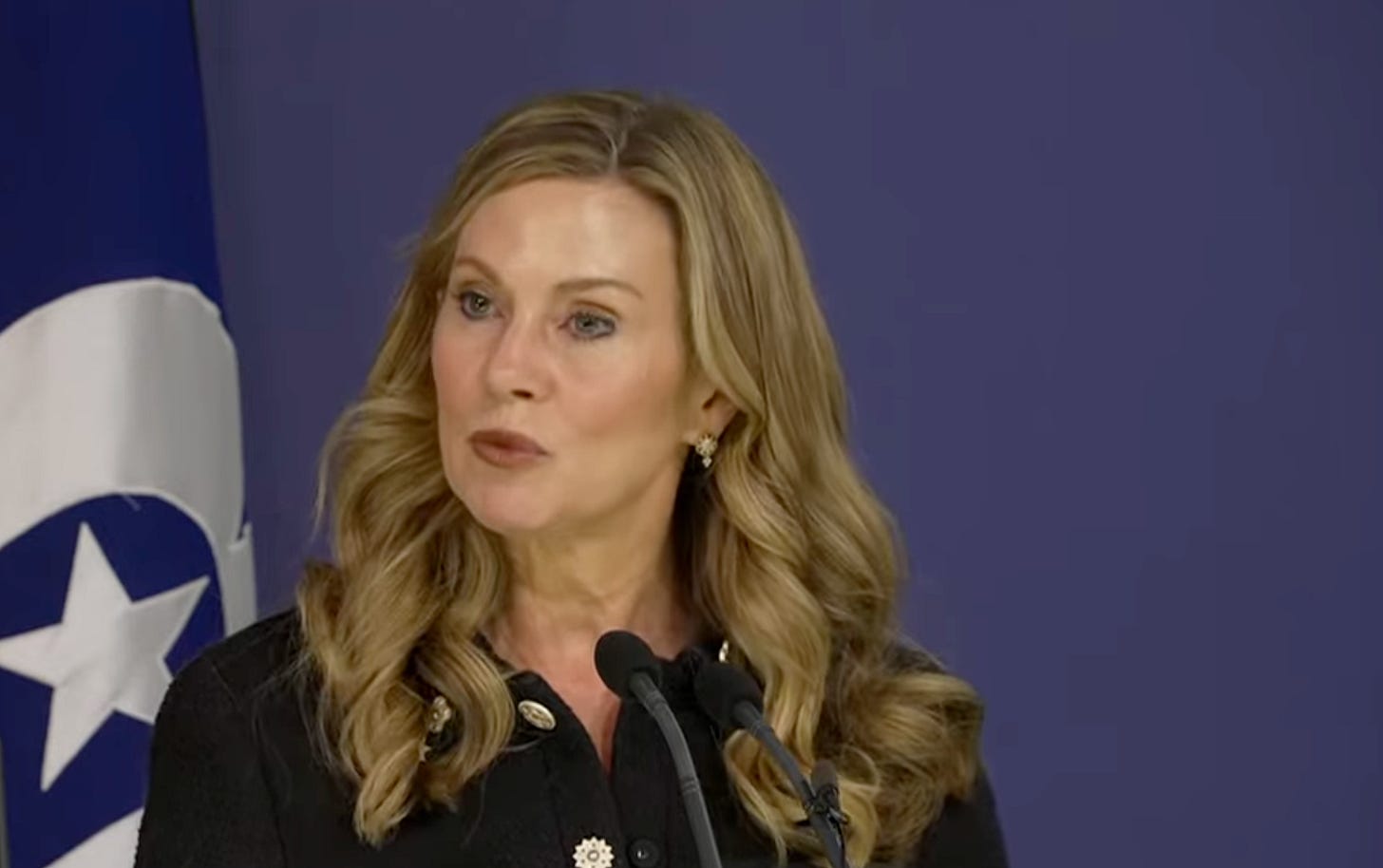

Communications Minister Anika Wells and eSafety Commissioner Julie Inman Grant announced the guidance at a Sydney press conference, outlining how platforms including Meta, TikTok, Google and Snapchat must detect and deactivate accounts of users under 16 while avoiding blanket age verification requirements for all users.

"From December 10, social media platforms will have a responsibility to remove young Australians under the age of 16 from their platforms," Wells said. "With the support of the regulatory guidance being published today, there is no excuse for non-compliance."

The regulations require platforms to take "reasonable steps" to identify underage accounts, prevent re-registration attempts and provide accessible appeals processes. Companies face penalties of up to 50 million Australian dollars for systematic failures to comply with the landmark legislation.


Flexible Technology Approach Emphasized

The 85-page guidance document does not mandate specific age verification technologies, instead recommending a "multi-layered waterfall approach" that allows platforms flexibility while prohibiting government identification or digital ID services as the sole verification method.

"It may be a surprise to some that the guidance does not mandate a single technology approach to assurance or set a required accuracy rating for age estimation," Inman Grant said. "On the contrary, it suggests a multi-layered waterfall approach with the proviso that the provision of government or digital ID can never be the sole or final choice."

The guidance explicitly states platforms should not require all users to verify their age, addressing industry concerns about creating friction for adult users. Wells explained that social media companies already possess extensive behavioral data about long-term users.

"If you have been on, for example, Facebook since 2009, then they know that you're over 16," Wells said. "There's no need to verify it."

Instead, platforms can rely on existing age inference technologies, such as natural language processing and analysis of login patterns, emoji use and communication behaviors, to identify underage users without broad verification requirements.

Privacy Protections Central to Implementation

Privacy considerations drove much of the guidance development, with eSafety conducting extensive consultations with the Office of the Australian Information Commissioner throughout the process. Additional privacy guidance from federal regulators is expected within weeks.

"Privacy was front of mind in everything we did, and we've been engaging deeply with the Privacy Commissioner and the OAIC on a range of issues," Inman Grant said.

The guidance emphasizes data minimization principles, requiring platforms to use the least invasive methods available and avoid retaining personal information from age verification processes. Companies must focus record-keeping on systems and processes rather than individual user data.

"We want these rules and the delivery of these laws to be as data-minimising as possible to make sure that people's data is as private as possible," Wells said.

Phased Compliance Timeline Outlined

The regulatory framework recognizes that removing existing underage accounts will be more straightforward than preventing new registrations. Platforms must prioritize deactivating current under-16 accounts by December 10, with more complex prevention systems for new users expected to require additional development time.

"We recognize that preventing future under-16s from joining platforms will take longer and be more complex in terms of building systems," Inman Grant said.

The guidance acknowledges that circumvention attempts through virtual private networks and other location-masking technologies are likely, requiring platforms to develop re-verification systems for suspicious account activity.

"We put very specific technical information on how we expect that to happen, but what we also expect the companies to do to mitigate these risks," Inman Grant said. "And when necessary, re-verify the ages of users who may be using VPNs, for example."

Industry Adoption Already Underway

Several major technology companies have begun implementing age estimation systems ahead of the December deadline. Dating platform Tinder announced Monday it would deploy FaceCheck, its proprietary facial age estimation technology. Gaming platform Roblox is using a third-party solution called Persona that was evaluated through Australia's Age Assurance Technology Trial.

Apple announced Tuesday it would introduce more granular age verification capabilities, providing signals to platforms about users being over 13, 16 or 18 years old.

"This morning, Apple also announced that they would be introducing much more granular age attribute information, so signals that they can give to platforms to be sure that they're over 13 or over 16 or over 18," Inman Grant said.

Google published a blog post coinciding with Australia's rule announcement, indicating the company was rolling out age assurance technologies on YouTube in the United States using infrastructure that could be adapted for Australian compliance.

Enforcement Approach Detailed

eSafety will adopt a graduated enforcement approach, beginning with informal engagement before pursuing formal action. However, Inman Grant warned that companies choosing non-compliance would face significant consequences.

"There may be a few who completely decide to do nothing just to test our resolve," Inman Grant said. "And if that is what they plan to do, we will meet them with force."

The enforcement framework will focus on systematic failures rather than individual account oversights. Officials acknowledged that perfect compliance is neither expected nor required on the implementation date.

"We don't expect that every under 16 account is magically going to disappear on December 10th," Inman Grant said. "What we will be looking at is systemic failures to apply the technologies, policies, and processes needed."

Wells emphasized the legislation seeks meaningful change rather than perfection.

"We are not anticipating perfection here," Wells said. "These are world-leading laws, but we are requiring meaningful change through reasonable steps that will see cultural change and a chilling effect that will keep kids safe online."

Global Regulatory Interest Grows

The Australian initiative has attracted international attention, with European Union leaders indicating potential adoption of similar measures. European Commission President Ursula von der Leyen referenced Australia's approach in her recent State of the Union address.

"I've been in touch with the European Commission in response to President Ursula von der Leyen's State of the Union speech, indicating that they would be working closely with Australia and potentially emulating what we're doing here," Inman Grant said.

Inman Grant drew parallels to the gradual acceptance of facial recognition technology for device security, noting that Apple's Face ID introduction in November 2017 initially faced resistance but became normalized.

"I believe that over time, facial age estimation, liveness tests, and other forms of age assurance will become normalized as well," she said.

Silicon Valley Engagement Planned

eSafety officials will travel to California next week for direct meetings with major technology companies including Apple, Discord, Character AI, OpenAI, Google and Meta. The discussions will focus on technical implementation details and compliance timelines.

"We will be meeting with a number of them in California next week to meet with their technical teams and their compliance teams and those that are rolling this out," Inman Grant said.

The meetings represent part of ongoing industry engagement that began in December 2024, with eSafety conducting consultations with 165 entities during the guidance development process.

"This is really part of the fairness and due diligence work that I think we need to do to make sure that they understand what they're required to do and by when," Inman Grant said.

Platform Self-Assessment Requirements

Companies must submit self-assessments by September 18 determining whether they qualify as age-restricted social media services under the legislation. eSafety will conduct independent evaluations based on final rules published July 29.

Most platforms are claiming they do not qualify as age-restricted services, potentially setting up legal challenges over scope and definitions.

"Most of them are saying they are not," Inman Grant said. "So there may be those who claim to not be and may force a legal fight or may choose to do nothing."

Reasonable Steps Standard Varies by Platform

The "reasonable steps" compliance standard will vary based on platform size, sophistication and resources. Larger companies like Meta, which already operates teen accounts with enhanced protections for users under 16, may face higher expectations than smaller platforms.

"Some of these more advanced, sophisticated companies will already have very sophisticated systems in place," Inman Grant said. "We will, of course, make allowance for those that are smaller, or this could become a much more resource-intensive exercise."

The guidance emphasizes that platforms must implement technologies and systems that are "reliable, accurate, robust, and effective, privacy-preserving, and data-minimizing, and minimally invasive."

Children's Rights Protections Outlined

eSafety simultaneously released a commitment statement on protecting children's digital rights during the implementation process, acknowledging the significant impact on young users and the need for educational resources.

"We know this is going to be a monumental event for a lot of children," Inman Grant said. "A lot of children welcome this, as certainly parents do, but we know this will be difficult for kids."

The agency committed to providing education and resources for children, parents and educators to prepare for the transition, recognizing the legislation represents a fundamental shift in young people's online experiences.

"Children's best interests are at the heart of everything we do at eSafety and we are seeking to strike a delicate balance," Inman Grant said in the published statement.

The legislation positions Australia as the global leader in youth online safety regulation while creating potential precedents for other jurisdictions considering similar age-based restrictions on social media access.

Wells framed the initiative as supporting families against platform interests: "We want kids to know who they are before platforms assume who they are."

The December 10 implementation date will mark the first time any country has comprehensively banned social media access for users under 16, making Australia a closely watched test case for global digital policy.

Sustaining Mencari Requires Your Support

Independent journalism costs money. Help us continue delivering in-depth investigations and unfiltered commentary on the world's real stories. Your financial contribution enables thorough investigative work and thoughtful analysis, all supported by a dedicated community committed to accuracy and transparency.

Subscribe today to unlock our full archive of investigative reporting and fearless analysis. Subscribing to independent media is more than information consumption; it is a commitment to factual reporting.

As well as knowing you’re keeping Mencari (Australia) alive, you’ll also get:

Get breaking news AS IT HAPPENS - Gain instant access to our real-time coverage and analysis when major stories break, keeping you ahead of the curve

Unlock our COMPLETE content library - Enjoy unlimited access to every newsletter, podcast episode and exclusive archive, all seamlessly available in your favorite podcast apps.

Join the conversation that matters - Be part of our vibrant community with full commenting privileges on all content, directly supporting Mencari (Australia).


It only takes a minute to help us investigate fearlessly and expose lies and wrongdoing to hold power accountable. Thanks!